Prompt Engineering

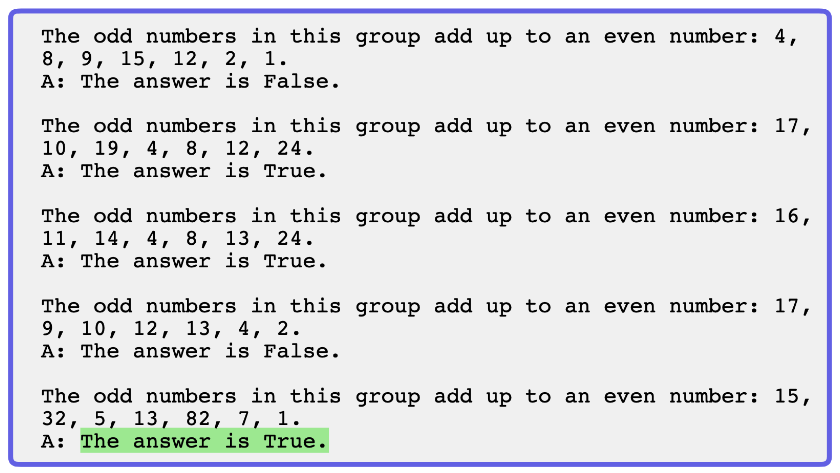

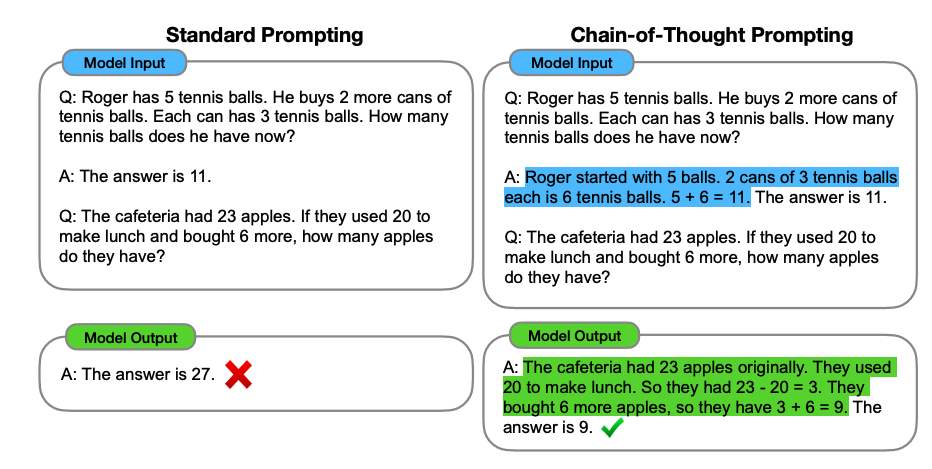

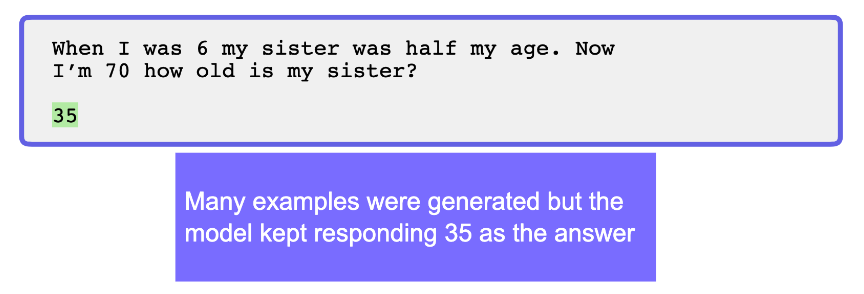

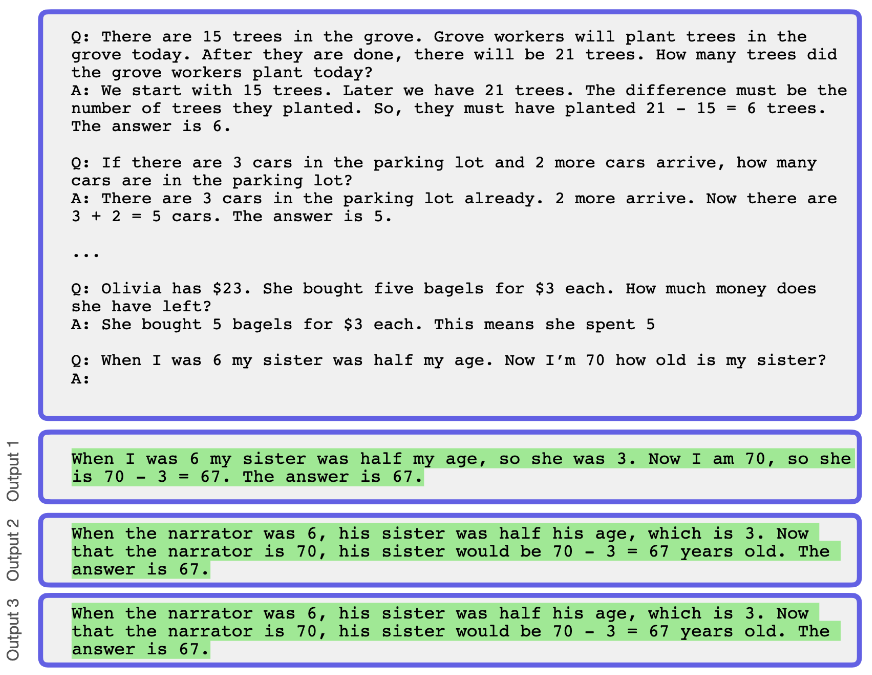

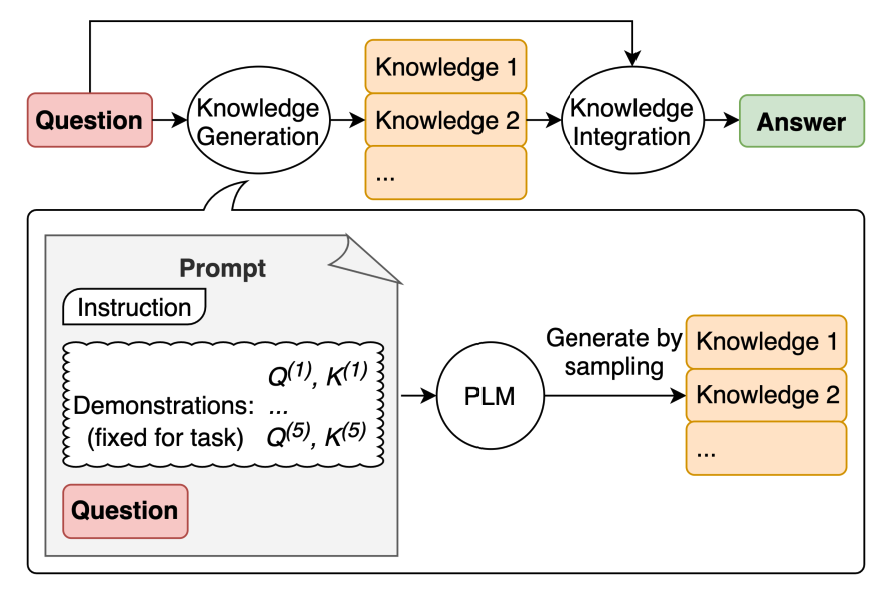

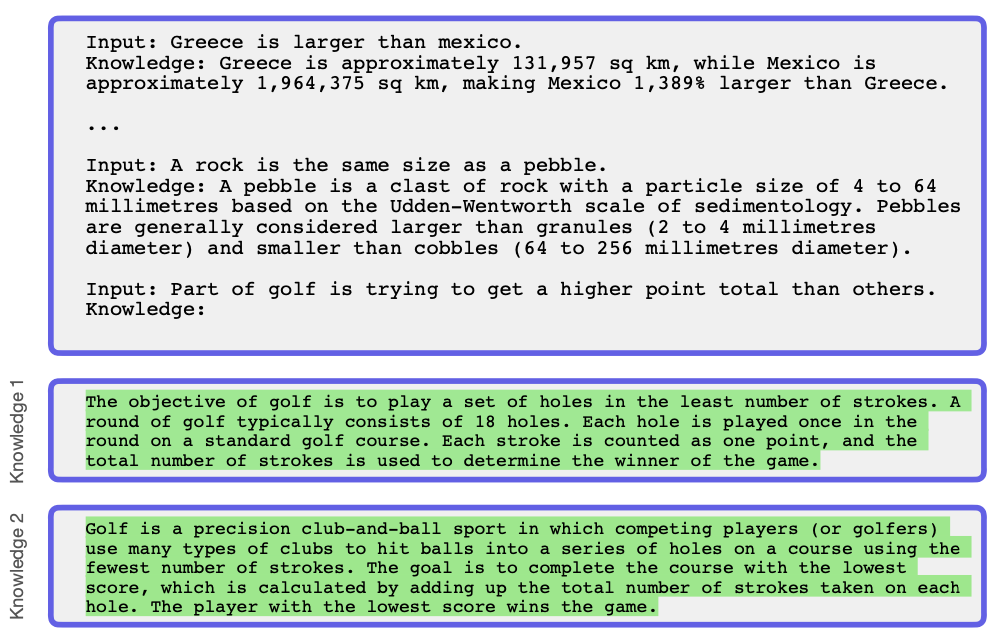

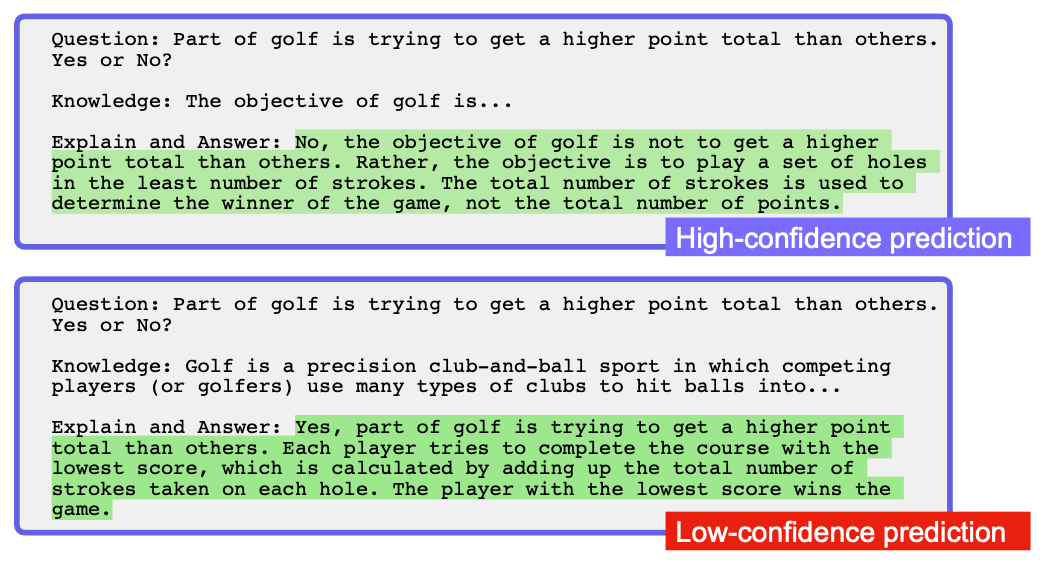

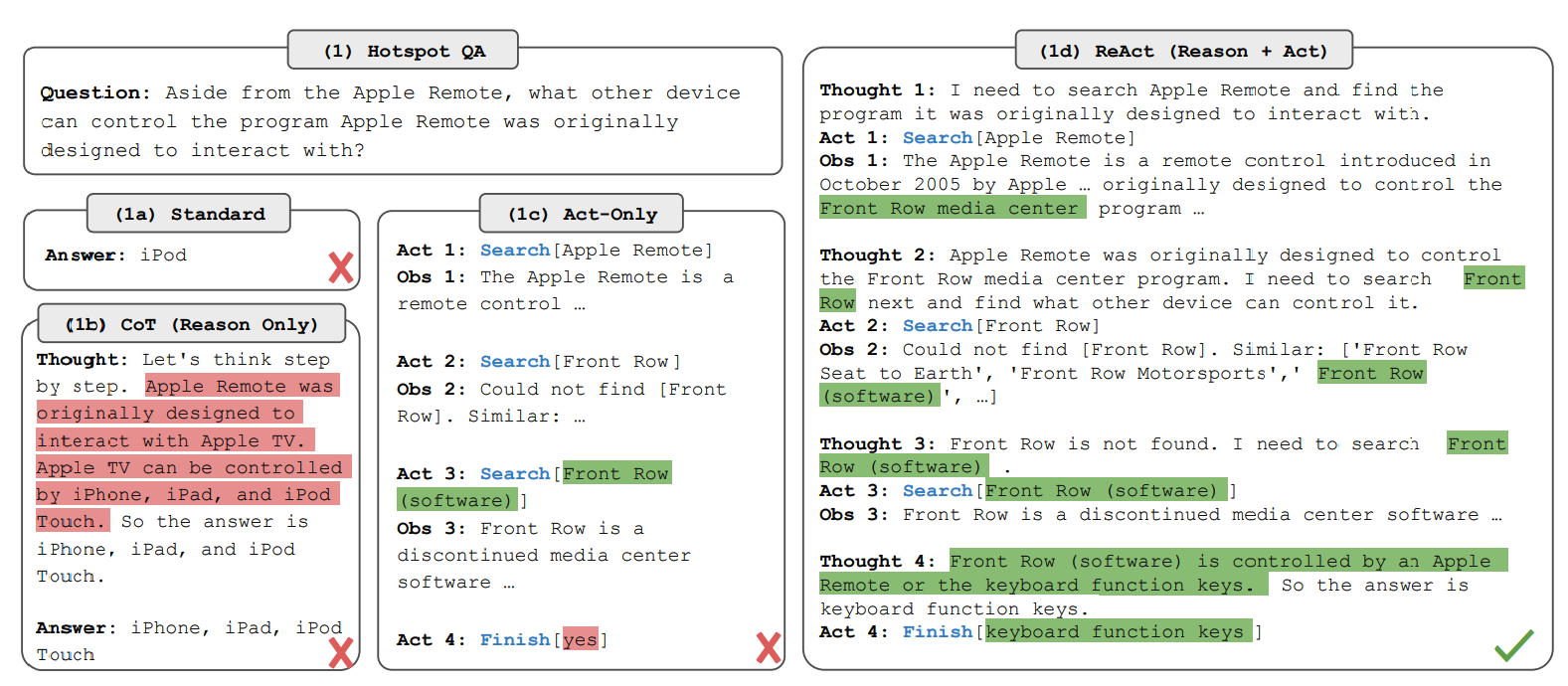

Prompt engineering is a technique used in natural language processing (NLP) that involves designing and creating prompts to generate desired outputs from an NLP model. In simpler terms, prompt engineering is crafting prompts or questions to get a specific response or answer from an AI model. It is used in various NLP applications, including chatbots, question-answering systems, and language translation. By designing effective prompts, developers can get AI models to respond accurately and appropriately to user queries, improving the overall user experience. Advanced techniques such as generated knowledge prompting and ReAct further improve the performance of large-scale language models: incorporating external knowledge benefits commonsense reasoning, and interleaving reasoning with acting allows for greater synergy between the two.

Here are some of the critical steps involved in prompt engineering:

There are different types of prompts that you can use in prompt engineering. Here are some of the most common types:

Other than these, many advanced prompting techniques have been designed to improve performance on complex tasks. They can be divided as follows:

Few-shot prompts: These include a few demonstrations (exemplars) of the task in the prompt so that the model can infer the expected format and behavior.

Chain-of-thought (CoT) prompting: Prompting can be further improved by instructing the model to reason about the task when responding. This is very useful for tasks that require reasoning. You can combine it with few-shot prompting to get better results, or use zero-shot CoT when exemplars are not available.
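To make the difference between few-shot and zero-shot CoT concrete, here is a minimal Python sketch that only builds the prompt strings; sending them to a model is left out. The exemplar, the `Q:`/`A:` layout, and the function names are assumptions made for illustration, not a format prescribed by the article. The trigger phrase "Let's think step by step." is the one commonly used for zero-shot CoT.

```python
# Illustrative exemplar written for this sketch (hypothetical, not from the article).
COT_EXEMPLARS = [
    {
        "question": "A shop has 3 boxes with 4 apples each. It sells 5 apples. "
                    "How many apples are left?",
        "reasoning": "3 boxes of 4 apples is 12 apples. 12 - 5 = 7.",
        "answer": "7",
    },
]


def few_shot_cot_prompt(question: str, exemplars: list[dict]) -> str:
    """Few-shot CoT: each exemplar shows its reasoning before the final answer."""
    blocks = [
        f"Q: {ex['question']}\nA: {ex['reasoning']} The answer is {ex['answer']}."
        for ex in exemplars
    ]
    # The new question is left open so the model continues with its own reasoning.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


def zero_shot_cot_prompt(question: str) -> str:
    """Zero-shot CoT: no exemplars, just a trigger phrase that elicits reasoning."""
    return f"Q: {question}\nA: Let's think step by step."


new_question = "Tom has 2 bags with 6 marbles each. He gives away 4. How many are left?"
print(few_shot_cot_prompt(new_question, COT_EXEMPLARS))
print()
print(zero_shot_cot_prompt(new_question))
```

Ending each exemplar with a fixed "The answer is ..." sentence is a design choice: it nudges the model to finish with a similarly phrased answer, which makes the final answer easy to parse programmatically.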
Chain-of-thought prompting enables large language models to tackle complex arithmetic, commonsense, and symbolic reasoning tasks. In the accompanying figure, the chain-of-thought reasoning processes are highlighted.

Self-Consistency: Self-consistency aims to improve on the naive greedy decoding used in chain-of-thought prompting. The idea is to sample multiple, diverse reasoning paths through few-shot CoT and use the generations to select the most consistent answer, typically by taking a majority vote over the final answers. This helps to boost the performance of CoT prompting on tasks involving arithmetic and commonsense reasoning.

Generated Knowledge Prompting: This technique uses additional knowledge, provided as part of the context, to improve results on complex tasks such as commonsense reasoning. Incorporating external knowledge benefits commonsense reasoning while maintaining the flexibility of pre-trained sequence models. Generated knowledge prompting is a simple yet effective method that improves the performance of large-scale language models on four commonsense reasoning tasks without requiring task-specific supervision for knowledge integration or access to a structured knowledge base. It highlights large-scale language models as flexible sources of external knowledge for improving commonsense reasoning.

The knowledge used in the context is generated by a model and included in the prompt to make a prediction, and the highest-confidence prediction is used. Specifically, generated knowledge prompting involves (a) using few-shot demonstrations to generate question-related knowledge statements from a language model, and (b) using a second language model to make predictions with each knowledge statement, then selecting the highest-confidence prediction. Generated knowledge prompting relies on external sources of information, while chain-of-thought prompting relies on internal associations and connections. The first step is to generate knowledge.
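The two answer-selection rules described above can be sketched in a few lines of Python: self-consistency takes a majority vote over the final answers of several sampled reasoning paths, while generated knowledge prompting keeps the single highest-confidence answer proposal. This is a sketch under stated assumptions: the completions and confidence scores are hard-coded stand-ins for model outputs, the "The answer is ..." pattern assumes numeric answers, and the knowledge-augmented prompt format and all function names are hypothetical. How the confidence is obtained (for example, from the probability the model assigns to an answer) is not shown.

```python
import re
from collections import Counter
from typing import Optional

# Assumption: every sampled chain of thought ends with "The answer is <number>."
ANSWER_RE = re.compile(r"The answer is\s+(-?\d+(?:\.\d+)?)")


def extract_answer(completion: str) -> Optional[str]:
    """Pull the final numeric answer out of one reasoning path, if present."""
    match = ANSWER_RE.search(completion)
    return match.group(1) if match else None


def self_consistency(completions: list[str]) -> Optional[str]:
    """Majority vote over the final answers of several sampled reasoning paths."""
    answers = [a for a in map(extract_answer, completions) if a is not None]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


def knowledge_augmented_prompt(knowledge: str, question: str) -> str:
    """Hypothetical format: one generated knowledge statement placed before the question."""
    return f"Knowledge: {knowledge}\nQuestion: {question}\nAnswer:"


def highest_confidence(proposals: list[tuple[str, float]]) -> str:
    """Pick the answer proposal whose confidence score is highest."""
    answer, _confidence = max(proposals, key=lambda p: p[1])
    return answer


# Hypothetical sampled completions (temperature > 0) for the same CoT prompt.
samples = [
    "3 boxes of 4 is 12, and 12 - 5 = 7. The answer is 7.",
    "3 * 4 = 12 apples; selling 5 leaves 7. The answer is 7.",
    "4 + 4 + 4 = 12, and 12 - 5 = 8. The answer is 8.",  # a faulty reasoning path
]
print(self_consistency(samples))  # prints 7

# Hypothetical (answer, confidence) pairs, one per knowledge-augmented prompt.
proposals = [("yes", 0.62), ("no", 0.91), ("no", 0.55)]
print(highest_confidence(proposals))  # prints no
```

Note how the faulty third path is outvoted: self-consistency does not need to know which reasoning is wrong, only that correct paths tend to agree on the same final answer.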
Below is an example of how to generate the knowledge samples:

The knowledge samples are then used to create knowledge-augmented questions to get answer proposals. The highest-confidence response is selected as the final answer:

ReAct: ReAct (Reason + Act) is a framework in which LLMs generate both reasoning traces and task-specific actions in an interleaved manner, allowing for greater synergy between the two. Reasoning traces help the model induce, track, and update action plans and handle exceptions, while actions allow it to interface with external sources, such as knowledge bases or environments, to gather additional information. ReAct outperforms state-of-the-art baselines on various language and decision-making tasks and generates human-like task-solving trajectories. It also improves human interpretability and trustworthiness over methods without reasoning or acting components.

In conclusion, prompt engineering is an essential technique in NLP that can help developers get more accurate and effective results from AI models. By following the key steps involved in prompt engineering and using the appropriate types of prompts, developers can build applications that meet the needs of their users and provide a better overall user experience.
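The ReAct loop described earlier can be sketched in Python: the model's Thought/Action output is appended to a running trace, any action is executed, and its result is fed back as an Observation until the model emits a finishing action. This is a minimal sketch under loud assumptions: `scripted_llm` replays fixed outputs instead of calling a real model, the toy `KNOWLEDGE_BASE` stands in for an external source, and the `Search[...]`/`Finish[...]` action syntax is hypothetical.

```python
import re
from typing import Callable, Iterable, Optional

# Hypothetical toy knowledge base standing in for an external source such as a search API.
KNOWLEDGE_BASE = {
    "Python": "Python is a programming language created by Guido van Rossum.",
    "Guido van Rossum": "Guido van Rossum is a Dutch programmer.",
}


def search(entity: str) -> str:
    return KNOWLEDGE_BASE.get(entity, f"No results for {entity}.")


# "Search" and "Finish" are action names chosen for this sketch.
TOOLS: dict[str, Callable[[str], str]] = {"Search": search}
ACTION_RE = re.compile(r"Action: (\w+)\[(.*)\]")


def react(question: str, llm: Callable[[str], str],
          max_steps: int = 5) -> tuple[Optional[str], str]:
    """Interleave model-generated Thought/Action steps with tool Observations."""
    trace = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(trace)  # expected shape: "Thought: ...\nAction: Tool[input]"
        trace += step + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            continue  # no action this turn; let the model keep reasoning
        tool, arg = match.groups()
        if tool == "Finish":
            return arg, trace
        tool_fn = TOOLS.get(tool)
        observation = tool_fn(arg) if tool_fn else f"Unknown action {tool}."
        trace += f"Observation: {observation}\n"
    return None, trace  # gave up after max_steps


def scripted_llm(steps: Iterable[str]) -> Callable[[str], str]:
    """Stand-in for a real LLM: replays fixed outputs and ignores the prompt."""
    it = iter(steps)
    return lambda prompt: next(it)


demo_llm = scripted_llm([
    "Thought: I need to find who created Python.\nAction: Search[Python]",
    "Thought: Python was created by Guido van Rossum. I need his nationality.\n"
    "Action: Search[Guido van Rossum]",
    "Thought: Guido van Rossum is Dutch, so I can answer.\nAction: Finish[Dutch]",
])
answer, trace = react("What nationality is the creator of Python?", demo_llm)
print(trace)
print(answer)  # prints Dutch
```

Passing the full trace back as the prompt is what lets a real model condition each new thought on earlier observations; the `max_steps` cap keeps a model that never finishes from looping forever.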

References: